TA-llm-command-scoring is a Splunk Technology Add-on that provides a custom streaming command designed specifically for evaluating command-line arguments (CLAs) from process events. It leverages large language models to assess the likelihood that a given CLA is malicious, assigning a simple, interpretable score.

This add-on isn’t a general-purpose AI chatbot or prompt interface. It doesn’t aim to replace Splunk’s | ai prompt=<prompt> command from MLTK v5.6. Instead, it's a purpose-built, lightweight assistant focused solely on scrutinizing CLAs—a specialized tool to help SOC analysts cut through noise and surface risky executions fast.

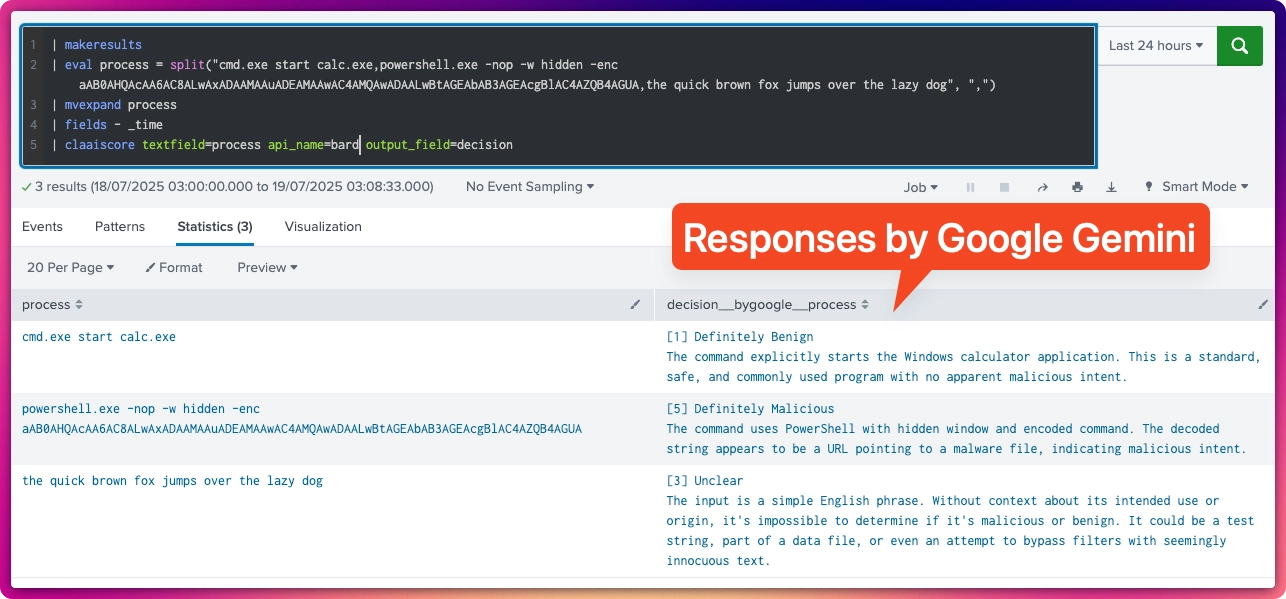

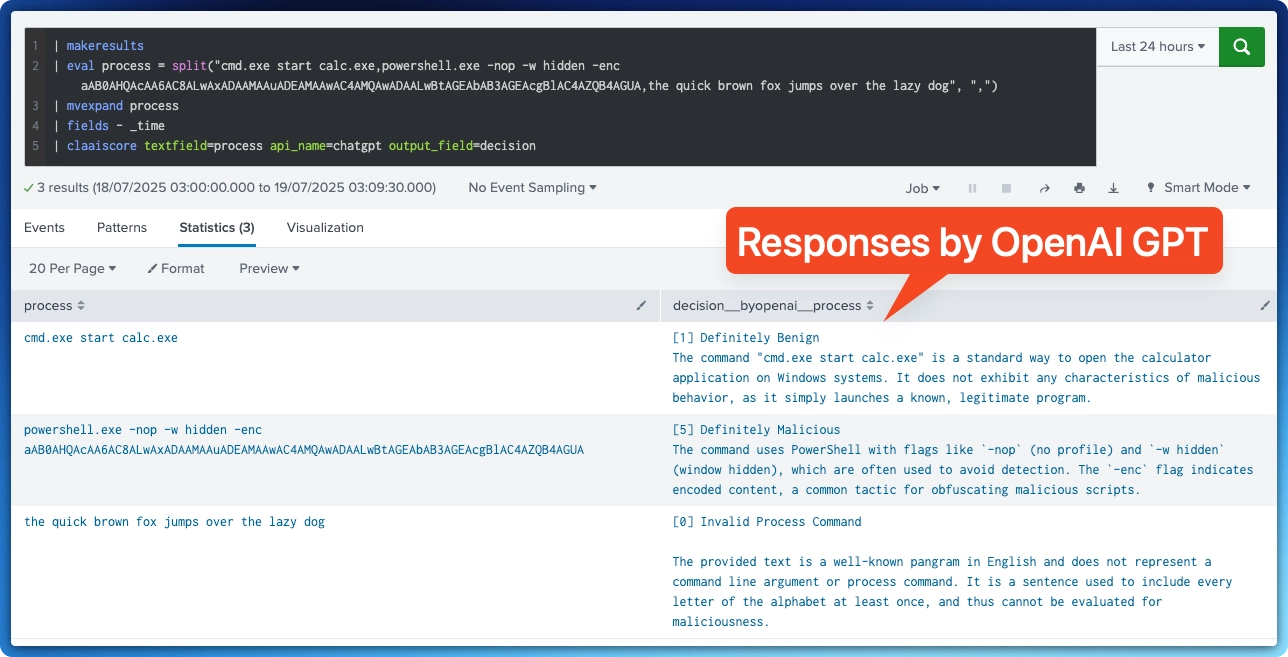

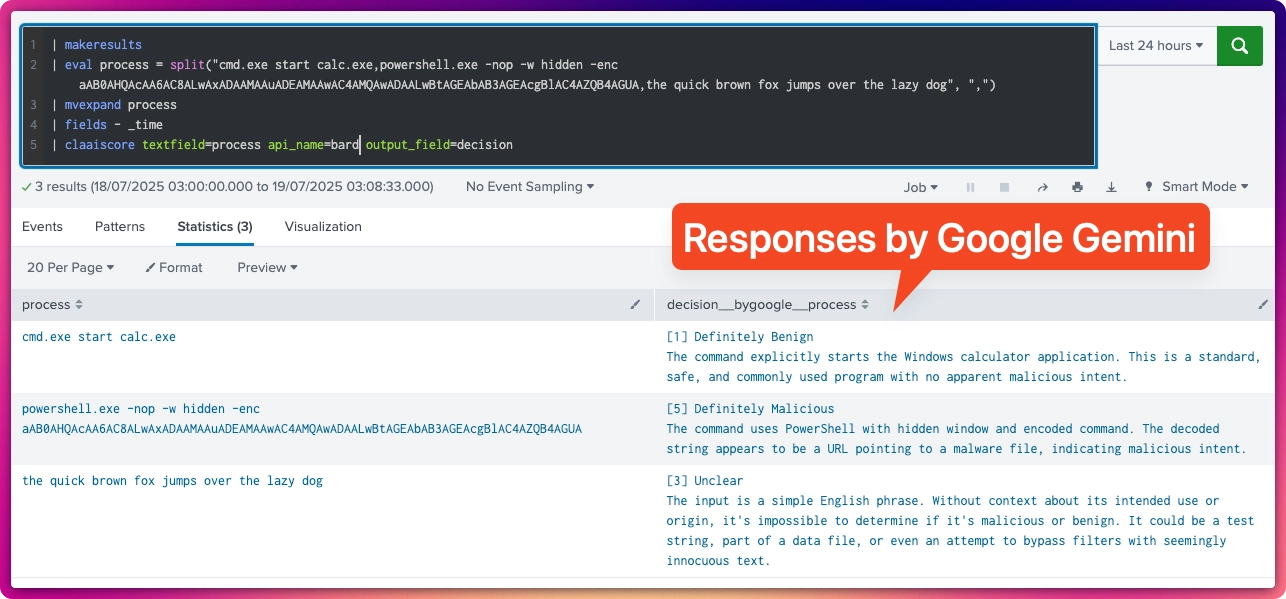

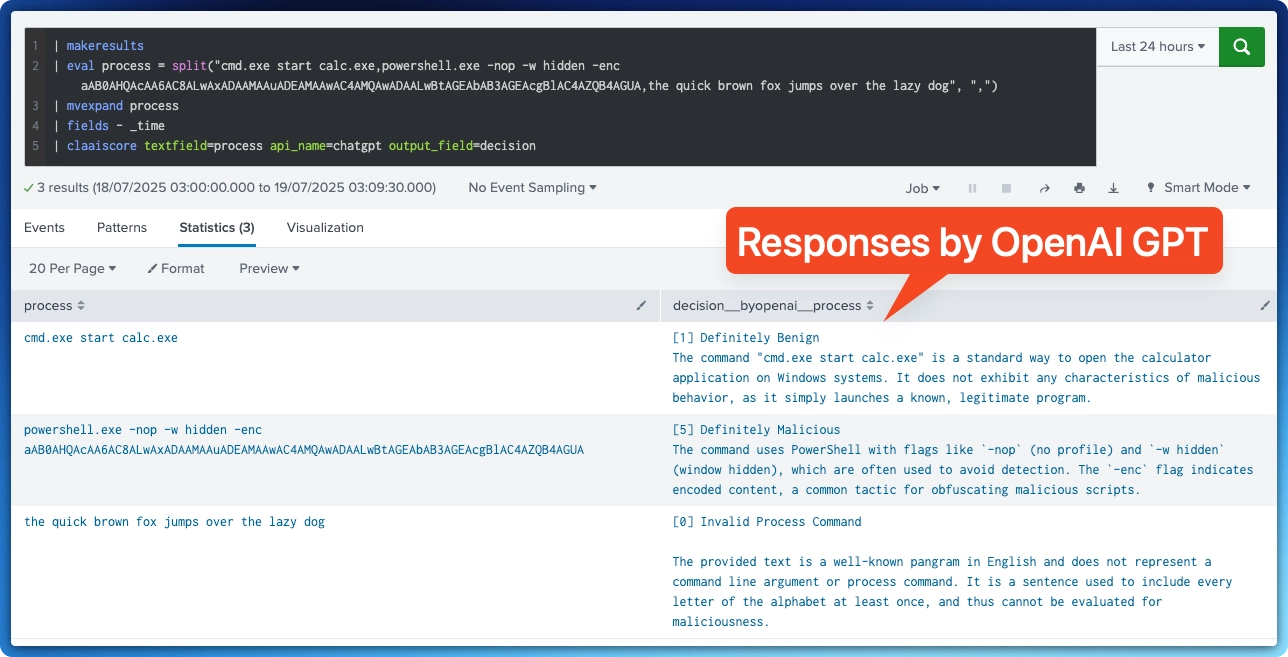

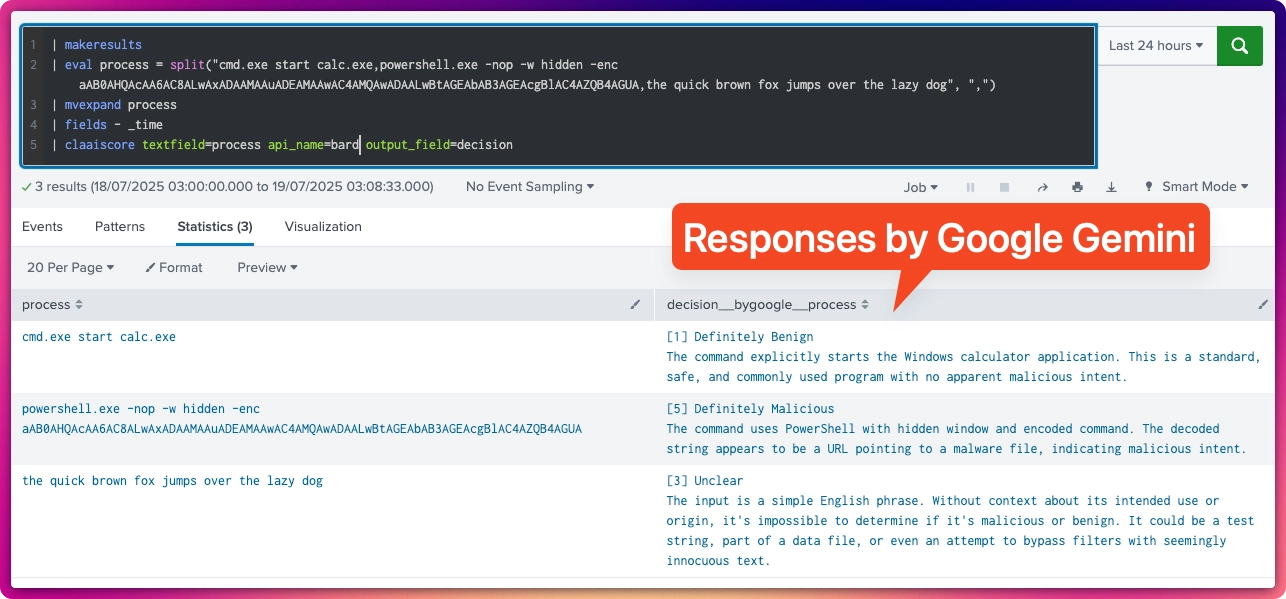

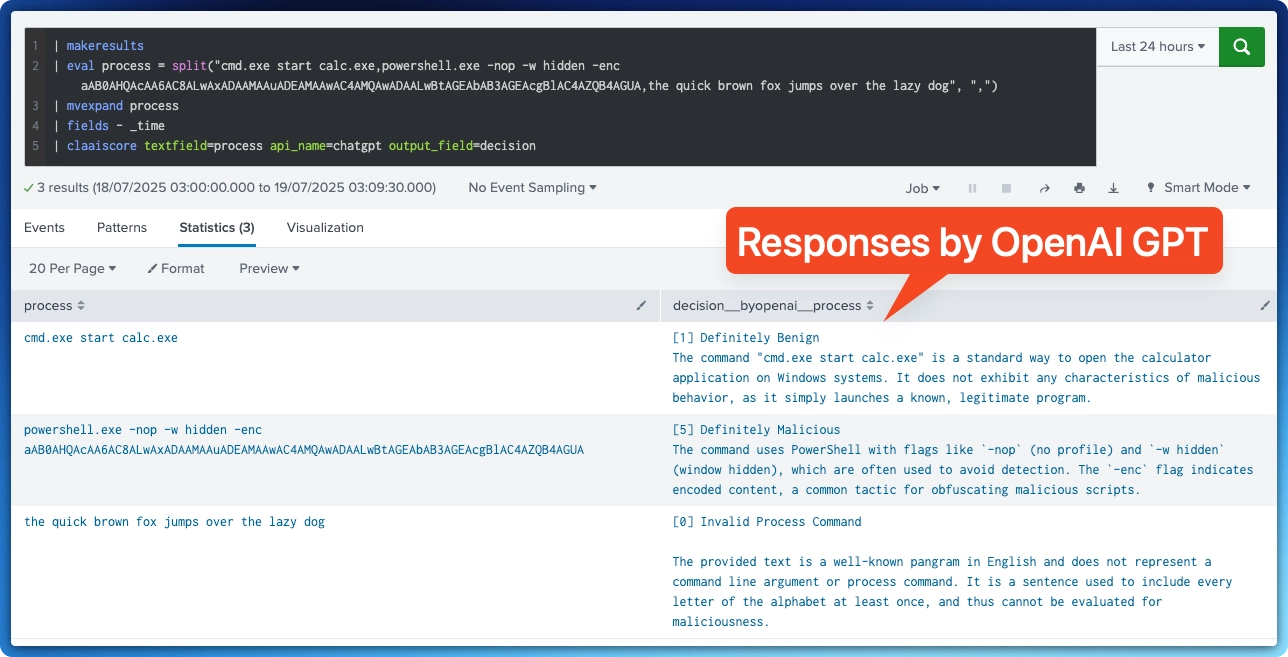

The custom command accepts a field that contains a valid Command Line Argument, e.g.: powershell.exe -nop -w hidden -enc aAB0AHQAcAA6AC8ALwAxADAAMAAuADEAMAAwAC4AMQAwADAALwBtAGEAbAB3AGEAcgBlAC4AZQB4AGUA
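To see why an analyst (or a model) would flag this example, it helps to decode the `-enc` payload. PowerShell's `-EncodedCommand` takes Base64 over UTF-16LE text, so a minimal Python sketch could look like the following. The helper name `decode_powershell_enc` is hypothetical and is not part of the add-on.

```python
import base64


def decode_powershell_enc(payload: str) -> str:
    """Decode a PowerShell -EncodedCommand payload (Base64 over UTF-16LE)."""
    return base64.b64decode(payload).decode("utf-16-le")


# Payload copied from the example command line above.
payload = (
    "aAB0AHQAcAA6AC8ALwAxADAAMAAuADEAMAAwAC4AMQAwADAALwBtAGEAbAB3AGEAcgBlAC4AZQB4AGUA"
)
decoded = decode_powershell_enc(payload)
print(decoded)  # http://100.100.100/malware.exe
```

No profile, a hidden window, and an encoded URL pointing at an executable are the kind of signals the score is meant to surface.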

The command asks the chosen AI model to scrutinize the command line, and the model responds with a Likert-type score:

[5] Definitely Malicious

[4] Possibly Malicious

[3] Unclear

[2] Likely Benign

[1] Definitely Benign

[0] Invalid Process Command

Each score comes with a short explanation of why the model chose it. The add-on integrates directly into Splunk searches via a custom streaming command and leverages LLMs' ability to read between the lines at scale, without fatigue.
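The exact wording the model returns is not documented here, so the following is only a sketch. It assumes the response begins with a bracketed score such as `[5] Definitely Malicious`, followed by free-text reasoning. The names `LABELS` and `parse_score` are hypothetical.

```python
import re
from typing import Optional, Tuple

# Labels taken from the scale listed above.
LABELS = {
    5: "Definitely Malicious",
    4: "Possibly Malicious",
    3: "Unclear",
    2: "Likely Benign",
    1: "Definitely Benign",
    0: "Invalid Process Command",
}

# Assumed response shape: "[<score>] <label and/or explanation>".
_SCORE_RE = re.compile(r"^\s*\[([0-5])\]\s*(.*)", re.DOTALL)


def parse_score(response: str) -> Optional[Tuple[int, str, str]]:
    """Return (score, label, explanation), or None if no bracketed score leads the text."""
    match = _SCORE_RE.match(response)
    if match is None:
        return None
    score = int(match.group(1))
    label = LABELS[score]
    rest = match.group(2).strip()
    # Drop the label from the explanation when the model repeated it verbatim.
    if rest.startswith(label):
        rest = rest[len(label):].lstrip(" :-.")
    return score, label, rest


result = parse_score("[5] Definitely Malicious: hidden window plus encoded payload")
print(result)
```

Splitting the score from the explanation this way would make it easy to sort or threshold on the number while keeping the reasoning available for triage.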

This app ships with an authorize.conf that defines a role called "can_run_claaiscore". Only users who hold both the Admin role and this role can view the app and run the command. However, because the custom command is exported globally, it can be run from any app context.

| your_search_here

| claaiscore textfield=process api_name=my-openai-key
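Conceptually, a streaming command handles events one at a time and adds a field to each. The sketch below imitates that flow in plain Python, without the Splunk SDK or a real LLM call. `output_field_name`, `annotate_events`, `fake_scorer`, and the way the `ai_mal_score` default is handled are illustrative assumptions, not the add-on's actual code. The output field follows the `<prefix>__by<provider>__<textfield>` pattern that the examples on this page use.

```python
from typing import Callable, Dict, Iterable, Iterator


def output_field_name(prefix: str, provider: str, textfield: str) -> str:
    """Build the output field name, e.g. decision__byopenai__process."""
    return f"{prefix}__by{provider}__{textfield}"


def annotate_events(
    events: Iterable[Dict[str, str]],
    textfield: str,
    provider: str,
    scorer: Callable[[str], str],
    prefix: str = "ai_mal_score",  # assumed default prefix
) -> Iterator[Dict[str, str]]:
    """Yield each event with the scorer's verdict added; events lacking the field pass through."""
    field = output_field_name(prefix, provider, textfield)
    for event in events:
        command_line = event.get(textfield)
        if command_line:
            event = {**event, field: scorer(command_line)}
        yield event


def fake_scorer(command_line: str) -> str:
    """Stand-in for the LLM call: flags encoded PowerShell, otherwise stays undecided."""
    if "-enc" in command_line.lower():
        return "[4] Possibly Malicious"
    return "[3] Unclear"


events = [{"process": "powershell.exe -nop -w hidden -enc AAAA"}, {"user": "alice"}]
rows = list(annotate_events(events, "process", "openai", fake_scorer))
for row in rows:
    print(row)
```

Because each event is processed independently, this shape fits anywhere in a search pipeline where the input field is already present.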

The output field is named ai_mal_score__by<LLM provider, e.g. openai | google>__<name of the input text field>.

| tstats max(_time) as _time from datamodel=Endpoint.Processes where Processes.process_name="lsass.exe" by Processes.user Processes.process

| rename Processes.* as *

| claaiscore textfield=process api_name=chatgpt-expires-aug2025 output_field=decision

| fields _time user process decision__byopenai__process

| where match(decision__byopenai__process, "\[[45]\].+Malicious")
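The final `where match(...)` clause keeps only rows whose verdict contains a bracketed 4 or 5 followed later by the word "Malicious". As a rough check of what that pattern accepts, the same expression can be tried in Python. SPL's `match` and Python's `re` are different regex engines, but a pattern this simple should behave the same in both. `is_flagged` is a hypothetical helper.

```python
import re

# Same pattern as in the where clause above.
PATTERN = re.compile(r"\[[45]\].+Malicious")


def is_flagged(verdict: str) -> bool:
    """True when the verdict carries a score of 4 or 5 labelled Malicious."""
    return PATTERN.search(verdict) is not None


samples = [
    "[5] Definitely Malicious",
    "[4] Possibly Malicious",
    "[3] Unclear",
    "[1] Definitely Benign",
]
for sample in samples:
    print(sample, is_flagged(sample))
```

Only the first two samples pass, which is the intended behaviour for a search that surfaces likely-malicious executions.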

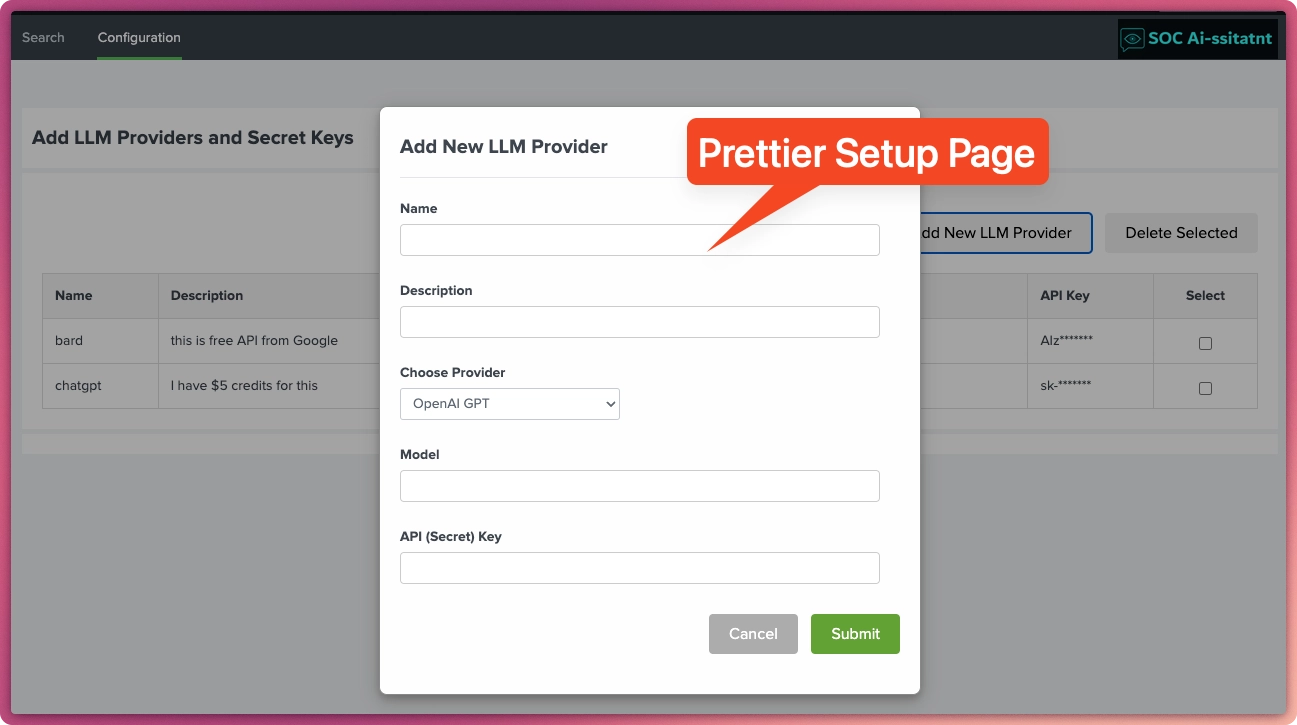

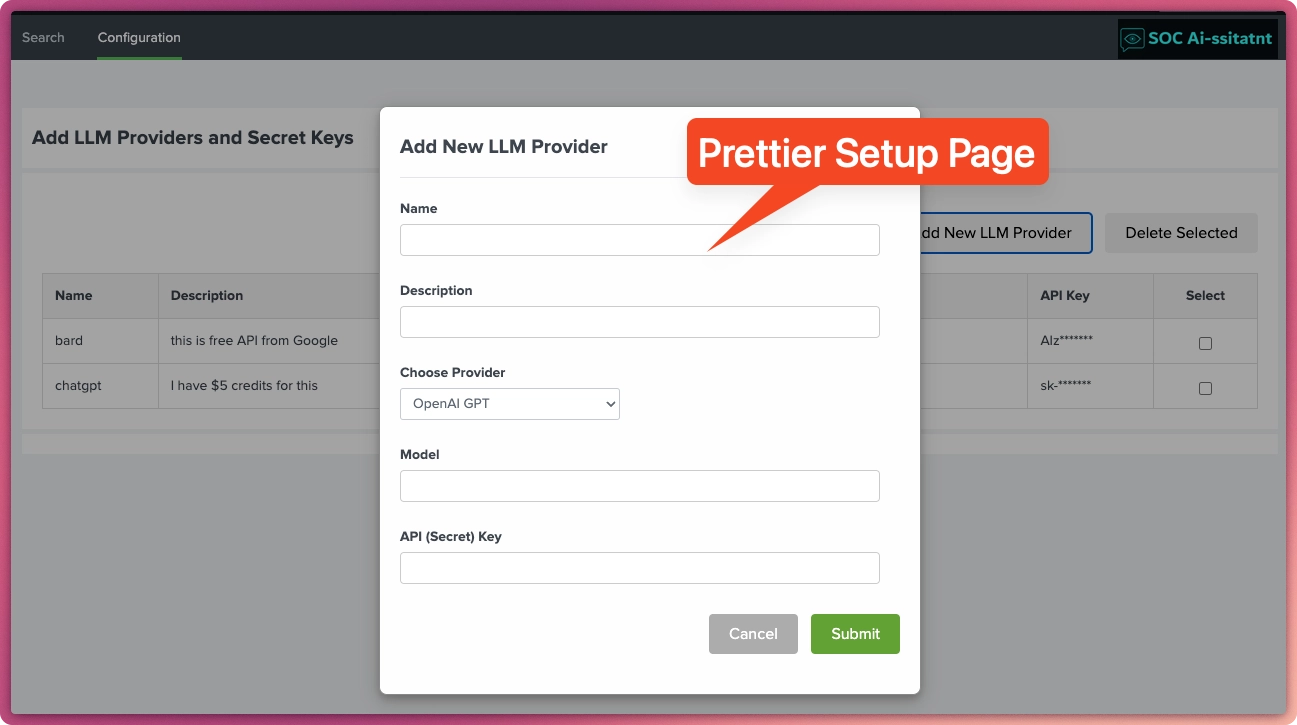

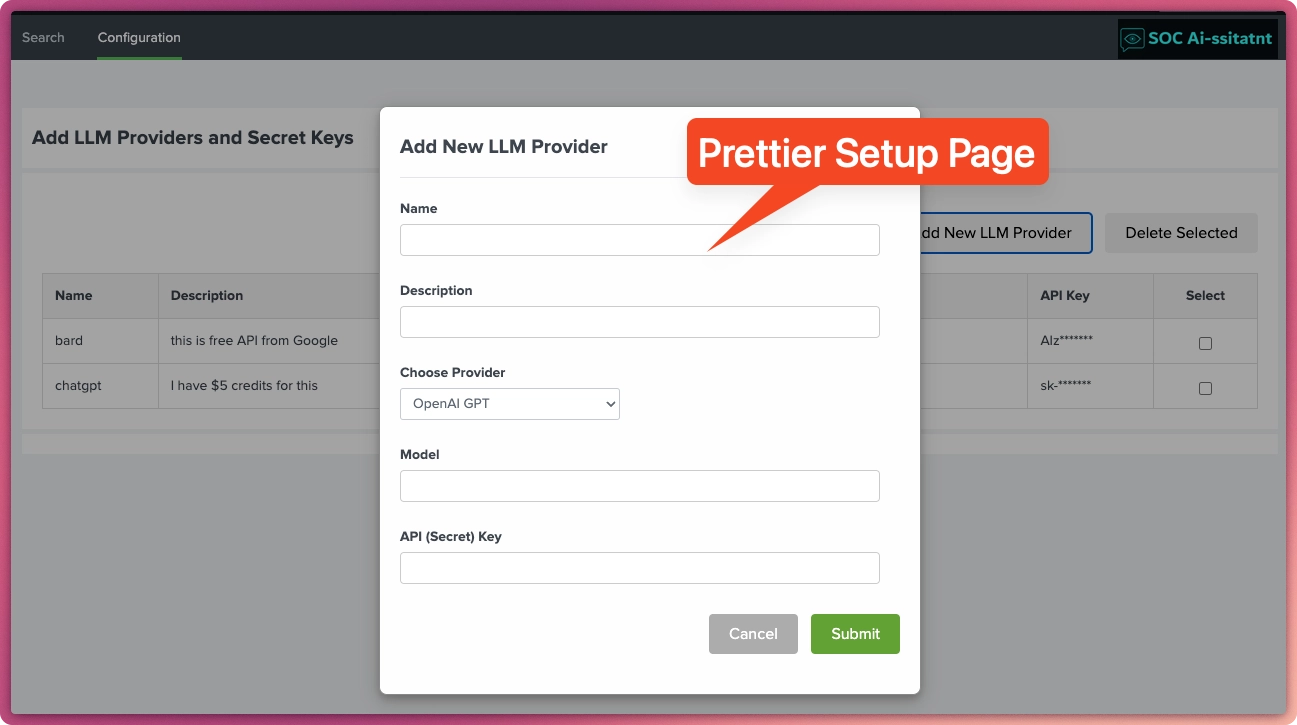

Now includes a Google Gemini LLM provider and a prettier setup page.

This project started as a way to sharpen my Python and JavaScript skills while riding the LLM/AI wave — and it's been a wild, rewarding ride so far.

To be clear: this isn't meant to replace Splunk MLTK's | ai prompt=<your prompt> command. If you're looking for a general-purpose LLM interface inside Splunk, MLTK 5.6's | ai is still the gold standard.

🛡️ A custom command focused solely on evaluating command-line arguments — and scoring them from 1 (benign) to 5 (malicious).

Houses a custom Splunk command that queries OpenAI's GPT to assess whether a process' command-line argument (CLA) appears malicious.
